Lots of ink will probably be spilled documenting the stories of what people did during the summer of 2020. With COVID-19 spreading throughout the world and many places going into lockdown, it was an uncertain and strange time. Many people took the opportunity to catch up on old TV shows, learn about investing, or create a side hustle. Me? Well, somehow I found myself becoming the maintainer of one of my favorite open source projects.

Part 1: The Big Rewrite

Almost 4 years ago I was gearing up to rewrite a rather huge AngularJS app. I had been a bit disillusioned with Google’s decision to kill off AngularJS (don’t get me started with Angular 2), and I was looking to build an app that wouldn’t be tied to the whims of Google, since they have a tendency to shut things down. After surveying the landscape, a solution involving the open source libraries React, Redux, and Material UI seemed like a good fit. All that was left was finding a good datatable component…

mui-datatables looked clean and worked smoothly. It made my app look beautiful, and I was excited. To add to this, the official GitHub repo seemed to be buzzing with activity. My first feature request got 12 reactions and a bunch of comments. There was clear enthusiasm about this project, and it stood out amongst all of the Material UI based datatables I evaluated. I felt like I’d found the final piece to my puzzle.

For a while everything went smoothly. I was in no rush and my work had me juggling several projects, so the rewrite took place in between other tasks I was doing. It wasn’t until I was a few months in that I realized I needed an ability that mui-datatables didn’t provide. “No worries,” I thought, “I’ll just put in another feature request.” But when I checked the repo, I noticed Greg, the library’s creator, was nowhere to be seen. Instead, someone named Gabriel was now running the show.

At first this was fine, and I was glad to see someone had taken up the mantle and was moving the library forward, but I soon found myself increasingly frustrated. I was so close to having the library that I needed, yet many of my pull requests (PRs) were left to languish and some updates to the library made my life harder. To add to this, Gabriel wanted to rewrite the whole library, deeming its internals fundamentally flawed. He made a pinned issue announcing this big rewrite (“Version 3.0”), and said it would fix the table’s internals and possibly completely change its API. However, after this announcement he continued to work on features for version 2.x, and in updates posted months later he would state that he hadn’t started on version 3 and was still thinking about things.

I was concerned. Once again I looked at other datatables, but I was filled with dread when I concluded that mui-datatables was still the best fit for what I was doing. I either had to stick it out and convince Gabriel to take my PRs, or fork the library and be on my way… and well, I forked it. And that actually turned out to be a pretty freeing experience. But there are downsides to forking that cannot be ignored:

- You don’t get updates from the community – you’re on your own.

- When you eventually leave your position, you leave the next developer with learning the fork you made. I’ve been in this boat and it’s not a fun boat to be in, especially as the fork gets old and bugs are found.

After the app I had been working on launched, I thought a little more about these 2 points. One of the key reasons for the rewrite (but not the only reason) was to get off of the now archaic AngularJS framework so that future developers could easily jump into the project. But leaving someone with a forked library that was heavily modified seemed to clash with this idea.

I decided to once again check in with mui-datatables to see if there was some way we could reconcile. What I found was 60 PRs, 2 pages of unanswered issues, and someone @-ing Greg and Gabriel, asking if the project was dead. The last release was 3 months old, and it had a number of problems (a malfunctioning resizable column feature, a broken responsive design, etc.). After Greg and Gabriel, I was the 3rd biggest contributor to the project, and with all the updates I’d done in my fork, I realized I was probably the most qualified person to take over. To add to this, COVID-19 was spreading around the country and my work had recently closed down. I was at home with nothing to do for the foreseeable future. I needed something to take my mind off things.

I decided to reach out to Greg on Twitter to let him know the situation and ask if I could take over. I’d previously sought him out to ask why he’d left the project so I knew he’d be responsive on Twitter. A week later he handed me the keys. I then went to 2 of my oldest PRs, merged them in, and did a release. I was the new maintainer.

Part 2: mui-datatables 3.0

Gabriel briefly returned to wish me well. He told me his time had become scarce, and he simply didn’t have enough of it to spend on the project. Even though I’d felt a lot of frustration with some of his decisions, we’d been friendly and I appreciated the work he’d done. Had he not taken on the project, it most likely would have died. However, a year had passed since he’d made his announcement about the next version of the library. Was a version 3 still on the table? I’d done a lot of work on my fork and there were a lot of PRs. Maybe there was enough new stuff to justify a big release.

For the next two weeks I pored over the PRs and open issues. It was oddly fun and proved to be an eye-opening experience. Some of the stuff people submit is completely nonsensical, while other things are highly complex and clearly had a huge amount of work put into them. For example, one person had rewritten the whole library in TypeScript, and while this was a neat idea, it was completely impractical and would have made it almost impossible to merge the other updates in (plus, I’m not completely sold on TypeScript – but that’s another story). On the opposite end, there were some requests that were small with little explanation. Oftentimes they didn’t work at all and/or caused the tests to fail. It was like people submitted their work without checking it.

I tried to be nice. After all, each PR represented someone spending their own free time to better this library. At the very least they deserved someone trying out and reviewing the changes that they made – even if they had to wait a year. And to my delight, people were pretty cool. I either got a positive response thanking me for looking at their PR, or no response at all.

Of the 60, I ended up accepting 23. The vast majority of these were bug fixes and minor updates. The only submission that really fell into the “cool new feature” category was one that added an injectable component feature. During this period I also ported over most of the updates from my fork, which in the end accounted for all but 5 of the new features/API updates. I also did a thorough review of the code base, which cleaned up a handful of anomalies. For example, most of the deprecation warnings had accidentally been disabled in version 2.14.0, and starting in version 2.13.1, a large 5MB file was accidentally being included in the npm package. No one seemed to have noticed these things though.

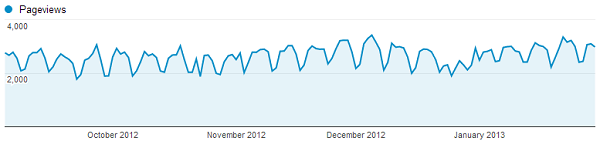

I also updated the library from using version 3 of Material UI to version 4. In the year that had passed, most of the Material UI community had moved on to version 4. Not updating the library to correctly work with version 4 had probably hurt adoption. When I had parted ways the previous year, mui-datatables had 15k downloads per week on npm and its closest competitor, the feature-heavy material-table, had 7k a week. Now the tables had turned. Material-table was crushing it at 80k downloads a week to mui-datatables’ 25k. The version issue most definitely wasn’t the only reason mui-datatables had lost ground, but I have to imagine it was a significant factor in people’s decisions.

In the end, version 3 would be no great revelation, but it would be a step forward and hopefully a step in the right direction.

Part 3: The Rise and Fall of a Maintainer

After it was released, a few people chimed in to say thanks and report bugs, but there was no big celebration that the library was back to getting updates. I got the impression that many people using the table had built their apps around it a year ago. It didn’t seem like it was attracting a ton of new users. From GitHub stars, I could tell that on average, 1.35 new people were starring the library a day, which seemed a bit lower than it had been in the past.

To get things going again I decided to start work on two features I felt were essential:

- A cell rendering method that would significantly boost performance.

- Draggable columns.

I had a soft spot for draggable columns. The feature had been discussed with feverish enthusiasm when I was originally looking at the table, and I felt like it would be poetic as the first big feature of the 3.x era. I wanted something that was nice, though; I didn’t want something cheap looking. So I got to work and created what I felt was a slick draggable column feature:

[Animation of the draggable column feature]

Most people will say they don’t care about small little effects like this – that all they really want is the functionality, but over time I’ve found this to be false. Little touches like this add up, and overall lead to users liking a product more.

As I prepped a new release, I began to talk about new features with the few people still hanging around. Maybe a grouping feature should be next? Editable columns? I was excited. I was going to get this table back on track and soon it would have features that rivaled material-table. But in addition to the lack of activity in the repo, something else bothered me: Surely I couldn’t be the only one who felt datatables for Material UI were lacking? Hell, when I was doing AngularJS there were several great community options. What was going on?

I went digging, and found my answer on the material-ui GitHub repo. In a thread lambasting material-table, several people stated that they weren’t happy with the community options. The co-creator of Material UI, Olivier Tassinari, answered the criticism by assuring them that the team was hard at work on an official datatable component. It would be ready for preview in September – basically at the end of the summer.

I had been out of the loop, and though it seemed obvious, apparently the community was displeased with both mui-datatables and material-table. The creators of Material UI realized they needed an official solution, so in October of 2019 they’d announced plans to create one. That explained why no other table had come forth, and it made me feel like mui-datatables and material-table were both lame ducks. A good solution was on the way, and there was no point in a community project if an official table was going to be supported. (However, I would later learn that certain parts of this official table were for paid users only – so material-table and mui-datatables would still have a place in the future.)

I was a little distraught, but decided to continue work, albeit at a slower pace. Then, after 10 weeks of being at home, I got the call to come back to work. I reintegrated mui-datatables into my work project and showed off some of the new features. My team lead seemed impressed and was thankful we were no longer using a forked project. With work on mui-datatables now restricted to evenings and weekends, my contributions to it slowed even more. Then, one day in late September, I handed in my resignation at work.

Wait, what?? Oh yeah, probably forgot to mention that during my time off I was kind of stressed about my future. My work had been extremely generous in paying us to stay home and do nothing, but there were rumors about leave without pay in the Fall. With no telework option available and no assurances made about what might happen once the leaves started turning, I had decided it was best to hedge my bets and look for another position.

In a twist of fate my new job would involve working on another AngularJS 1.x project (it never ends!) and possibly porting it to React (though as of now that hasn’t happened – my guess is we’ll still be dealing with AngularJS 1.x apps 10 years from now, though that’s a topic for another time). Now I had even less motivation to continue work on mui-datatables. I didn’t want the project to fall back into disarray, so I felt like the only reasonable thing to do was to find a successor. Thankfully during my time as maintainer another developer, Woo Dohyeong, had joined me in my quest to better the library. He was the obvious choice to take over and with Greg’s blessing, I passed the torch. After Woo made his first release I stepped back.

It was bittersweet. Part of me knew I didn’t have enough time to be a maintainer forever. It’s a job that gobbles up the extra minutes of your day and it’s mostly thankless. I didn’t talk too much about it above, but there were a few days where I would handle half a dozen questions, and the majority of people wouldn’t say thanks or respond. Some people would even be rude. However, reviving the table and improving upon what so many others had built was rewarding. There was a sadness in stepping back, but it was for the best.

Final Thoughts

Well, I didn’t expect this to be so long, but the story (even trimmed down) turned out to be much longer than I thought it would be. I needed a place to write it down though, and if you read it, thank you for reading my story. The summer of 2020 was a crazy time, and even this bloated blog post barely scratches its surface. Hell, I didn’t even write about my bike rides through empty streets or obsession with Hollow Knight (and Animal Crossing, and Cuphead), not that those things are in any way relevant to mui-datatables, but they filled the gaps between development. Anyway, hopefully you found this entertaining or enlightening. Next time you use a piece of open source, be sure to show appreciation to the maintainer, and don’t be afraid to contribute yourself. If you have the time it can be a fun little adventure. Also, don’t get too caught up in the endless cycle of front-end rewrites. There’s a certain madness to it.
